Posts posted by borjam

-

-

Well, maybe that Zaxnet system is way too naive. That approach can work in regulated spectrum with all the players following similar rules. But in ISM bands, where several radically different schemes can be used… Remember that Deity mentioned a clever mechanism to tell nearby WiFi equipment to leave some radio time free. How old is Zaxnet anyway?

Also there might be other problems. How is its front-end filtering? I mean, despite using one end of the available band, how would it resist off channel interference?

But on ISM bands your transmission system should be designed with the assumption that it will have to coexist. And actually I am probably wrong about the 2.4 GHz band. Maybe it has gone through a sort of transition period with less pollution. But now wireless headphones and other non-WiFi 2.4 GHz devices are on the rise.

So my assumption is, indeed, likely inaccurate. I was only thinking about WiFi, sorry.

-

Yes, the 6 GHz WiFi band is more or less the same (radio wise) as the 5.8 GHz band.

The advantages are:

- There is much more bandwidth than at 2.4 GHz.

- It is less tolerant to obstacles.

The second may be shocking for some, but it is a real advantage. Whenever I give a talk about WiFi networks to customers I point out the main mistake made in many WiFi deployments. Whenever I hear something like "I use high power network gear, I can connect even from the other side of the parking lot!" I answer "You botched it!"

Why? Because WiFi equipment adapts to network conditions. When your signal is great, equipment chooses the high throughput signal codings. With poor conditions it falls back to the slowest modes, which can work with a marginal radio signal. So, yes, it connects from the parking lot! What many people fail to grasp is that there is a precious resource in your wireless network and it's called "time". If you have a far away user connected with a low performance mode, it will be using a lot of "on the air" time, which means it will slow down the whole "cell" (the users of the same access point).
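To put rough numbers on the "time" argument, here is a minimal sketch. The PHY rates, the 1500 byte frame and the client names are illustrative assumptions, and it ignores preambles, acknowledgements and contention, so treat it as an order-of-magnitude picture only.

```python
def airtime_us(payload_bytes, phy_rate_mbps):
    """Microseconds on air for one payload; ignores preambles, ACKs and contention."""
    return payload_bytes * 8 / phy_rate_mbps


def airtime_shares(client_rates_mbps, payload_bytes=1500):
    """Share of the air time each client takes if every client sends one frame per round."""
    times = {name: airtime_us(payload_bytes, rate)
             for name, rate in client_rates_mbps.items()}
    total = sum(times.values())
    return {name: t / total for name, t in times.items()}


# Hypothetical cell: three nearby clients and one connected from the parking lot.
cell = {"near_1": 300.0, "near_2": 300.0, "near_3": 300.0, "parking_lot": 6.0}
shares = airtime_shares(cell)
for name, share in shares.items():
    print(f"{name}: {share:.1%} of the air time")
```

Under these assumptions the single slow client takes well over 90% of the air time, which is why the whole cell slows down.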

So why is 5-6 GHz better? It makes you increase the access point density in order to cover a wide area, and it will help prevent distant users from connecting to the wrong AP because of the higher attenuation.

Also, less obstacle tolerance means less interference from neighbors. So it can be a blessing.

Anyway, with WiFi users migrating to 5 GHz whenever possible, the 2.4 GHz band should be less polluted now than it was several years ago. Except of course for other equipment such as follow focus, video transmitters, etc.

As I told a customer the other day: the two kinds of wireless hell on Earth are schools and movie sets.

As for 5G, it can use several different bands. The lowest frequencies (700 MHz) have a much better wall penetration so it should work pretty well inside buildings, while there are provisions for high density deployments on 2.6 or even 26 GHz which would be adequate for places such as sports stadiums.

-

5 hours ago, The Documentary Sound Guy said:

Yeah, spread spectrum was one of the things I had in mind. I've been aware of the 2.4GHz improvements in consumer electronics, and haven't seen those innovations spread to our professional gear. I would give it a look.

Properly implemented spread spectrum can be really robust. Of course it can be vulnerable to receiver overload, but the legal limits on transmission power should avoid that.

5 hours ago, The Documentary Sound Guy said:

That being said, my skepticism remains. Even with better reception techniques, it's still unregulated spectrum, and spread spectrum techniques don't change the fundamental physical constraints of microwave frequencies: poorer barrier penetration and higher absorption by human bodies.

Of course there is no magic for that, although some spread spectrum schemes that also use FEC (Forward Error Correction), like LoRaWAN, can achieve unbelievable results. I am not suggesting they are using LoRa (it's a low data rate modulation, so impossible), but that modern modulation techniques can be amazing.

FEC comes at the cost of latency, though. Note that they claim it includes timecode. Presumably that means they can cope with more latency and the audio files will still be usable.

5 hours ago, The Documentary Sound Guy said:

Even if spread spectrum can work well with one device, what happens when you have a dozen transmitters (all of which will frequency hop and raise the noise floor with each other)? How will they interact with all the other devices on set (in particular Teradeks and lighting control, not to mention crew WiFi)? What happens in a dense urban location where there are dozens or hundreds of WiFi networks, all competing for the same spectrum?

There is a limit to that of course, but (assuming it is frequency hopping) if the hops are really random they shouldn't expect many collisions.

As for other devices, it depends. If using frequency hopping I am wondering about the front end filtering of the chips they employ.
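A back-of-the-envelope check of the "not many collisions" claim, as a minimal sketch. It assumes every transmitter picks its channel uniformly at random and independently in each time slot, with all slots aligned. The 79 channel figure is borrowed from classic Bluetooth purely as an illustration; nothing here is known about the actual product.

```python
def hop_collision_probability(channels, transmitters):
    """Probability that one transmitter's hop lands on a channel that at least
    one of the other transmitters picked in the same slot (uniform random hops)."""
    if channels < 1 or transmitters < 1:
        raise ValueError("channels and transmitters must be positive")
    return 1.0 - (1.0 - 1.0 / channels) ** (transmitters - 1)


# Hypothetical set: a dozen hopping transmitters sharing 79 channels.
p = hop_collision_probability(channels=79, transmitters=12)
print(f"Chance that a given hop collides: {p:.1%}")
```

With those assumed numbers roughly one hop in eight collides, which a scheme with some redundancy could plausibly absorb. It says nothing about WiFi or video links occupying part of the band.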

5 hours ago, The Documentary Sound Guy said:

Will all the devices continue to reliably pass time-critical audio streams with fixed and low latency? My understanding is that part of the reason consumer devices work as well as they do in 2.4GHz is because they use packet switched protocols where lost packets can be re-sent and latency can be variable and isn't super critical. A real-time, synchronous data stream is harder to send with the same quality of service guarantee than a packet switched one.

I guess they will keep latency kinda under control, maybe by having the equipment make decisions about interference avoidance. This is old tech; even the Telebit Trailblazer modems of the 80s did something like that over telephone lines. Instead of the classic approach of one carrier, they used many low bandwidth carriers, and if the error rate was high for some carriers their frequencies were blacklisted, if I remember well.

40 minutes ago, Paul F said:

I suspect wireless microphone manufacturers that offer 2.4G products don't develop their own modulation techniques. Wifi and Bluetooth live in the 2.4 and 5G bands and soon 6G. They have worked very hard for all these years improving the technology in competition and as a consortium. I just can't imagine that a wireless mic company has the wherewithal to do any better than the people that are making billions off the wifi and bluetooth market, which includes audio products such as earbuds, headphones, etc; companies like Broadcom. I suspect wireless microphone companies buy the chips from vendors like Broadcom. The latest release of wifi offers 12ms latency.

As I said above, I imagine they are using some off the shelf chipset that implements that. I have hints because a customer in a completely unrelated field is developing some spread spectrum transmission system and I am pretty sure they aren't developing modulation schemes.

24 minutes ago, The Documentary Sound Guy said:

That makes sense. I would suspect that as well. My question is how suitable are those chips for professional audio use?

I guess they are good enough for modest, prosumer level equipment, but I am pretty sure they are not trying to compete with the high end professional stuff all of you use. The RF front ends alone must be really expensive.

My brother, who works for a stage equipment company, told me that he was sure that Lectrosonics cheated with power levels because he couldn't understand how they manage to work otherwise.

I had to explain to him that, well, that's not possible and, well, not all RF circuits are created equal!

24 minutes ago, The Documentary Sound Guy said:

Is WiFi latency directly comparable to the latency of an in-house modulation?

WiFi imposes several limitations, including collision avoidance measures if you are intending to send large packets. Also it doesn't have an arbitration protocol. Maybe they are using WiFi chipsets with a kinda tailored implementation of the protocols? But I doubt it.

If you develop your own scheme you can make important decisions so that you can compromise between latency, error correction, total channel capacity, etc, with more flexibility.

24 minutes ago, The Documentary Sound Guy said:

I would tend to assume that latency in WiFi would mean 12ms for packet delivery, and if a packet is missed, it would have to be re-sent, which would mean a buffer is needed to smooth out packet drops. So ... minimum 24ms (first send + retry), plus some processing time for the acknowledgement to time out and a random seed to prevent simultaneous transmission. That very quickly adds up to a frame or more.

There is buffering also just because you are packing together a bunch of samples into a packet and computing an error detection/correction code. So, latency.
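The buffering part is simple arithmetic. As a minimal sketch, assuming 48 kHz audio and the deliberately crude model that each resend costs one more packet interval (both are assumptions for illustration, not anything a manufacturer has stated):

```python
def packetization_delay_ms(samples_per_packet, sample_rate_hz=48_000):
    """Time needed just to collect the samples that go into one packet."""
    return 1000.0 * samples_per_packet / sample_rate_hz


def buffered_delay_ms(samples_per_packet, retries, sample_rate_hz=48_000):
    """Delay when the receive buffer must be deep enough to absorb `retries`
    resends, each assumed to cost one additional packet interval."""
    return (1 + retries) * packetization_delay_ms(samples_per_packet, sample_rate_hz)


for n in (48, 96, 480):
    print(f"{n} samples per packet: {packetization_delay_ms(n):.1f} ms, "
          f"{buffered_delay_ms(n, retries=2):.1f} ms with room for two resends")
```

Small packets keep the delay down but spend more of the air time on headers, so the packet size is one of the compromises a designer has to make.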

24 minutes ago, The Documentary Sound Guy said:

I know WiFi is a physical layer, so maybe it's not packet switched in itself? Can it offer better QoS if it's not broadcasting TCP on top?

WiFi sends packets of course. You can say that access points do the packet switching. And the use cases for TCP and UDP are different. UDP is mostly appropriate if your priority is real time (say, a journalist broadcasting live via satellite link) and you can tolerate a glitch or two. TCP, with congestion control, error detection and retransmission, offers data integrity but it will sacrifice latency.

It can be a middle ground solution anyway.

24 minutes ago, The Documentary Sound Guy said:

Anyway, all I have are questions that nobody here can answer, and we won't know the answers until we see some of these in the field, so I guess I'll sit and we'll see if my skepticism can be assuaged.

I think Rode are no fools. As a matter of fact, three years ago the marketing dept wanted to buy a wireless microphone to record some videos at trade shows. They were going to buy some utterly crappy stuff and I convinced them to, as a bare minimum, buy a Rode set. So far it has worked even in very busy trade shows with WiFi activity all over the place.

In typical Rode fashion, I imagine it will be very good equipment for the money but surely no match for the established high end names.

-

14 hours ago, The Documentary Sound Guy said:

It's 2.4GHz. Unless they've done something truly revolutionary with antennas or in their Rx algorithm, it's going to have trouble in urban environments. I can get 260m of range from my 2.4GHz Zaxnet IFBs ... if I take them into the mountains with no interference and clear line of sight. In practice, they struggle with dropouts at 20 feet. That system was engineered over a decade ago, and 2.4GHz tech has changed a lot, but until proven otherwise, I don't have faith that a 2.4GHz system is anywhere near reliable enough for production use.

Maybe they are using some spread spectrum modulation that, as long as the receiver is not saturated by a really strong signal (such as a nearby microwave oven), can be unbelievably resilient. I have heard of some frequency hopping applications working on 2.4 GHz that, I guess, are based on (maybe?) some new chipset implementing it.

For certain applications spread spectrum can seem like magic. And with digital signal processing power becoming so affordable and energy efficient you can use techniques that were unthinkable several years ago.

-

-

5 hours ago, tourtelot said:

You all know of course that this is a problem of Apple's own making. Actually fries my ass, the more than a few years old Apple design path.

This is only one of the reasons that I continue to run my large Dante rig on a pair of 2012 MBPs. You know? The ones with all the proper ports right on the machine?

Indeed, Apple have been quite stupid (in my opinion). They should keep an up to date proper Ethernet interface. Maybe not cheap but at least with a minimum expectation of being solid.

I can imagine countless situations in which someone goes to record a concert with a $4000 laptop and a $25 crappy Ethernet adapter only to find that it won't work while others (maybe with built in Ethernet port) are capable of doing it.

Dodgy hardware can damage a reputation more than nonexistent products. And I got the Belkin adapter from the Apple Store together with the laptop. It was the recommended model.

At least the hub I have just tried is not terrible, but it was a bit more troublesome to set up than the TB-GbE. And it probably means that other dongles based on the same chipset should work.

One more test.

With the Thunderbolt adapter running a full duplex iperf3, kernel CPU consumption was about 44% with full bandwidth.

With the Ugreen hub, 70 - 75% CPU, good performance. Not sure about its buffering strategy; it took some seconds to reach full bandwidth while the Thunderbolt adapter reached it almost instantly.

With the crappy Belkin, 92% CPU (!!!!) and appalling performance.

[ ID][Role] Interval Transfer Bitrate

[ 5][TX-C] 0.00-30.00 sec 2.75 GBytes 787 Mbits/sec sender

[ 5][TX-C] 0.00-30.00 sec 2.75 GBytes 787 Mbits/sec receiver

[ 7][RX-C] 0.00-30.00 sec 1.11 GBytes 319 Mbits/sec sender

[ 7][RX-C] 0.00-30.00 sec 1.11 GBytes 319 Mbits/sec receiver

As far as I know that is not 44% (or 72%) of the whole CPU power, but of one core.

-

@Vincent R. It works, it seems.

That said, when moving it from one USB-C port to another I was forced to delete the interface and recreate it. Otherwise it was unable to achieve clock lock.

I have made the test connected to a 2010 Mac Pro running Mojave through a direct cable, dedicated interface (no switches messing around).

Latency is good. iperf throughput is as it should be.

But it was trickier to make it work, being USB-C. Curiously latency with the Thunderbolt adapter is a bit higher. But seems to be more solid. I mean, I had to do some voodoo macumba in order to make the hub work, deleting the interface and recreating again when I changed USB ports. Otherwise it would not achieve lock.

-

Yes, it is that one. But let me try actually with Dante this evening/night and I will let you know.

Anyway, remember that despite the additional dongle Thunderbolt will always be better than USB-C latency wise.

I will put it to work with Dante this evening and check how latency goes compared to the old Thunderbolt to GbE adapter.

By the way, the Apple Thunderbolt to GbE adapter supports AVB extensions and it allows you to fine tune the connection mode, enabling or disabling flow control. The others (either Realtek or AX) do not offer that.

-

I have tried a different device. This is a UGreen adapter that includes HDMI, a USB-C port, two USB-A ports and Gigabit Ethernet.

The chipset is identified as AX88179A, and the iperf3 test looks good as well.

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID][Role] Interval Transfer Bitrate Retr

[ 5][TX-C] 0.00-600.00 sec 58.3 GBytes 834 Mbits/sec sender

[ 5][TX-C] 0.00-599.96 sec 58.3 GBytes 834 Mbits/sec receiver

[ 7][RX-C] 0.00-600.00 sec 63.8 GBytes 913 Mbits/sec 78 sender

[ 7][RX-C] 0.00-599.96 sec 63.8 GBytes 913 Mbits/sec receiver

A bit more packet loss than the Thunderbolt adapter but not bad anyway.

-

On 7/14/2023 at 10:18 PM, Patrick Farrell said:

Very interesting topic. I think Realtek used to publish a driver for macOS, though not sure it's compatible with the latest versions. Have you investigated using a non-default driver? Also interested how the D-Link adapter performs. It was a sad day when Apple stopped including an ethernet port on their laptops.

The driver currently used on macOS Ventura is made by Apple, it seems. I will try to look for other alternatives, but using a third party driver can be problematic in case you update the operating system itself.

I think I read somewhere (but there is much fuzzy stuff on "thah intarwebs") that Realteks were problematic until Apple made their driver. However, the problems I have observed are happening with the Apple provided builtin driver in macOS.

Amazon delayed the order and I cancelled it. Anyway it seems that the D-Link contains a Realtek chip as well. A more advanced one I think. I will still try it eventually and report here.

Speaking to Lindy (their representative in Spain was so kind to call back responding to my feedback) there is a model that might work, based on a different chipset.

But we still don't know the cause of the problem I saw. It can be either (or all) of these:

- Poor driver

- Poorly designed chipset (it can affect driver design enormously)

- Using USB-C: Remember that the good Apple adapter is Thunderbolt, which means it is attached to PCIe, which offers better throughput, direct memory access if necessary and lower latency.

I'll keep you posted!

-

Have you tried making the measurements at an entirely different location? I am wondering whether local interference is messing with the measurement, either through the cable or the meter itself.

If you have ferrite cores you can also try to put them around the coaxial cable and see if they make a difference. That would point to a cabling problem (or a severe impedance mismatch at the meter input).

-

Yes, I am sure you can record over a Belkin adapter. Although in my opinion it's unacceptable to lose packets at such a low bandwidth in 2023. It already was 10 years ago!

And what really worries me is that sometimes negligible deficiencies can add up in unexpected ways and ruin your day with difficult to debug problems. So it is always better to make sure that each and every element performs like it should.

So, do they compare in the real Dante Inferno? I think so.

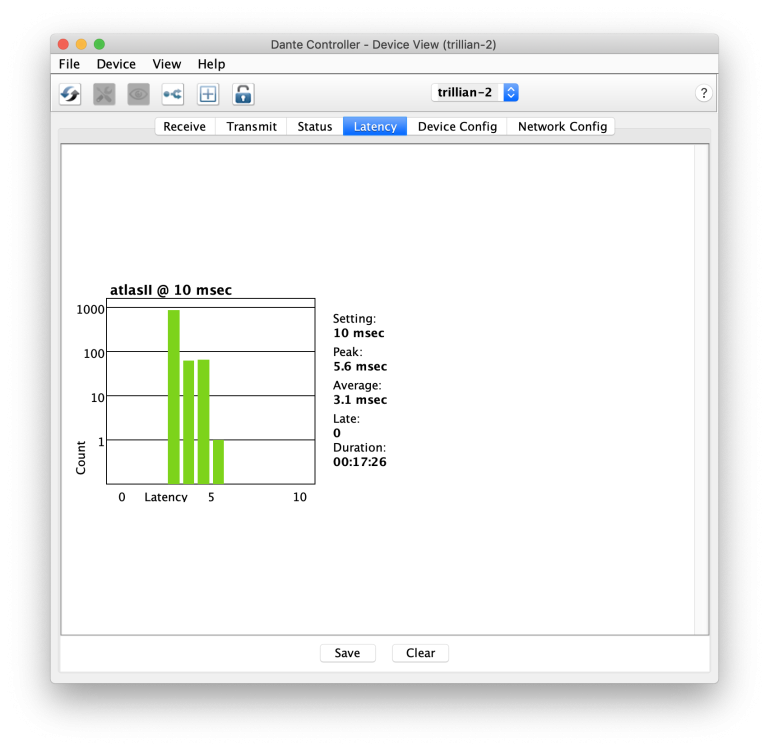

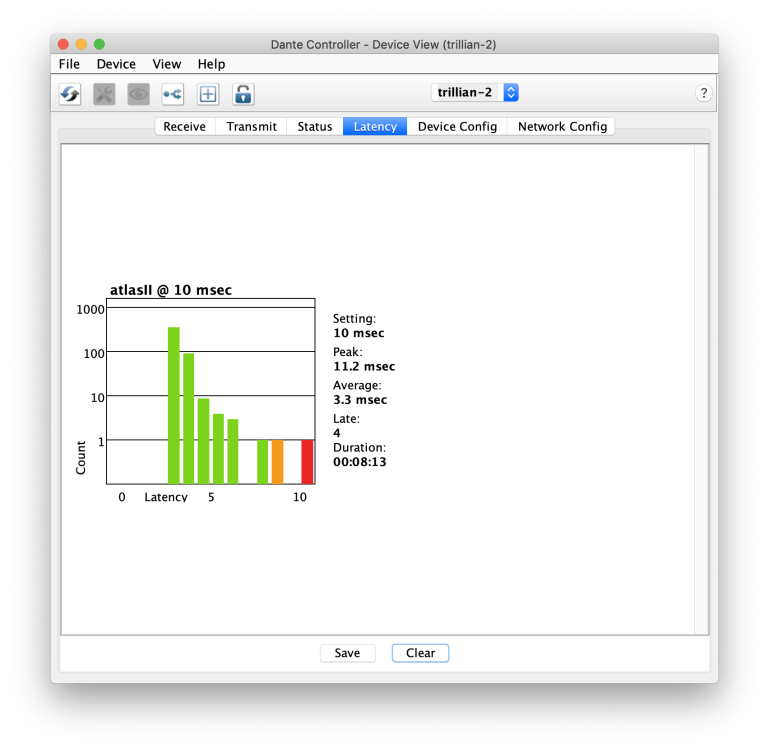

I have made a latency comparison.

Audio source:

- 6-core 2010 Mac Pro @3.33 GHz running 10.14.6.

- iTunes playing audio to a Metric Halo ULN-2 interface, interface looping back audio (otherwise iTunes won't allow Dante Via to grab it)

Audio destination:

- 16 inch Macbook Pro M1-Max running 13.4.1 with the latest security update (c)

- Audio aggregate device with a 16 track Dante Virtual Interface and a Sound Devices MixPre 3 (I need to inject timecode to my recording)

- Digital Performer 11.22

And sending 16 tracks (two of them actual audio, the rest silence) which means around 20 Mbps.
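The "around 20 Mbps" figure can be sanity checked with a minimal sketch. The 48 kHz sample rate and 24 bit samples are assumptions (the stream format is not stated here), and the packet header overhead is not modelled.

```python
def audio_payload_mbps(channels, sample_rate_hz=48_000, bits_per_sample=24):
    """Raw audio payload in Mbit/s, before any packet header overhead."""
    return channels * sample_rate_hz * bits_per_sample / 1e6


payload = audio_payload_mbps(16)
print(f"16 channels: {payload:.2f} Mbit/s of raw audio")
print(f"Share of a gigabit link: {payload / 1000:.1%}")
```

With those assumptions the raw audio is a bit over 18 Mbit/s, so roughly 20 Mbps on the wire is plausible once headers are added. Silent tracks cost the same as tracks with actual audio, since the samples are sent regardless.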

Two network configurations:

Belkin adapter (judging by the hardware configuration it seems they claim 5 Gbps!) in "auto" mode (it doesn't allow tuning flow control, etc. anyway)

Apple Thunderbolt to GbE via Apple TB3->TB2 dongle

I have been recording 17 tracks on DP (16 from Dante, one from the MixPre with the TentacleSync LTC) and while recording I have locked the screen a couple of times, switched to a different application (like Terminal) and I have also stopped the recording, deleted all the recorded audio and compacted the project.

Look at the latency graphs from Dante Controller running on the Mac Pro. I think they speak for themselves. The peak latency events occurred when I locked the screen and unlocked it. The Belkin adapter had actual trouble, which is shocking given that these new ARM based machines are actual supercomputers with an unbelievable memory bandwidth.

(Not trying to be pedantic especially given that computers and operating systems pay my bills, not audio, but I think all of you should know!)

-

Just thought everyone here would be interested. I got an M1 Max 16" MacBook Pro when they were launched. Although at home I mostly use WiFi, I got a Gigabit Ethernet adapter just in case. It's always useful to have one around, especially nowadays when you might need to use Dante or other Ethernet audio transport.

TL;DR: Buy the Thunderbolt 3 to Thunderbolt 2 adapter and the Thunderbolt 2 to Gigabit Ethernet while supplies last!

There are other alternatives in the market (a Sonnet adapter using the same Broadcom chip as the Apple Thunderbolt-GbE costs as much as $200), and out of curiosity I have ordered a D-Link adapter which is claimed to have a non-Realtek, much better controller. I will report back when it arrives.

The shocking thing is, I stumbled upon some advice from BJ Buchalter (Metric Halo) recommending to use a dongle processionary (Thunderbolt 3 to Thunderbolt 2 adapter chained with a Thunderbolt 2 to Gigabit Ethernet one) instead of the usual hardware sold by Apple themselves. In my case, a Belkin adapter.

I have used Dante very little and I need to record a concert on Sunday, so of course I did my homework testing my Dante configuration, etc, in order to avoid pestering the FOH guy with stupid debugging time.

I was a bit shocked, the timing was more jittery than I remembered. So just in case I ordered the Apple "processionary". It arrived today.

Running just a simple network performance test called "iperf", these are the results:

10 minutes of bidirectional iperf3 test (TCP) on a clean GbE network, two Aruba 2530 switches, to an HP server running FreeBSD 13.2 and Broadcom GbE interfaces.

bge0: <Broadcom BCM5720 A0, ASIC rev. 0x5720000>

The processor is a Xeon E3-1260L at 2.4 GHz.

Belkin adapter:

[ ID][Role] Interval Transfer Bitrate Retr

[ 5][TX-C] 0.00-600.00 sec 41.6 GBytes 596 Mbits/sec sender

[ 5][TX-C] 0.00-600.00 sec 41.6 GBytes 596 Mbits/sec receiver

[ 7][RX-C] 0.00-600.00 sec 21.6 GBytes 310 Mbits/sec 24422 sender

[ 7][RX-C] 0.00-600.00 sec 21.6 GBytes 309 Mbits/sec receiver

Apple processionary:

[ ID][Role] Interval Transfer Bitrate Retr

[ 5][TX-C] 0.00-600.00 sec 58.0 GBytes 830 Mbits/sec sender

[ 5][TX-C] 0.00-600.00 sec 58.0 GBytes 830 Mbits/sec receiver

[ 7][RX-C] 0.00-600.00 sec 63.8 GBytes 914 Mbits/sec 4 sender

[ 7][RX-C] 0.00-600.00 sec 63.8 GBytes 914 Mbits/sec receiver

So, indeed, outrageous! Either the Realtek chip is extremely poor (I was told there was even profanity in the code comments of a Realtek driver for FreeBSD, and I have indeed had horrible problems with them in other applications), and/or the USB-C subsystem is really poorly designed (the Apple interface is connected to PCIe), and/or the Realtek driver is awful.

Still, it feels like a high performance processor like an M1 Max should be able to cope with a mere gigabit without a hitch. The Xeon inside the server was launched in Q2'11 (TWELVE YEAR OLD TECHNOLOGY!).

The Apple adapter lost FOUR packets in 10 minutes. The Belkin/Realtek TWENTY FOUR THOUSAND FOUR HUNDRED TWENTY TWO!

I just can't understand why Apple doesn't bother to release proper hardware. Charge me $50 or even $100 if they want, but I demand properly working equipment instead of the useless crap sold on their own online store.
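If you want to compare several adapters without eyeballing the numbers, the summary lines can be pulled apart with a few lines of Python. This is only a sketch written against the summary format pasted above (GBytes and Mbits/sec units, optional Retr column); `parse_summary` is a name made up for illustration, and other iperf3 versions or units would need a different pattern.

```python
import re

# Matches lines such as:
# [ 7][RX-C] 0.00-600.00 sec 21.6 GBytes 310 Mbits/sec 24422 sender
SUMMARY = re.compile(
    r"\[\s*(?P<id>\d+)\]\[(?P<role>[A-Z\-]+)\]\s+"
    r"(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"(?P<gbytes>[\d.]+)\s+GBytes\s+"
    r"(?P<mbps>[\d.]+)\s+Mbits/sec"
    r"(?:\s+(?P<retr>\d+))?\s+(?P<side>sender|receiver)"
)


def parse_summary(text):
    """Return one dict per summary line found in the pasted iperf3 output."""
    rows = []
    for m in SUMMARY.finditer(text):
        rows.append({
            "role": m["role"],
            "side": m["side"],
            "mbps": float(m["mbps"]),
            "retr": int(m["retr"]) if m["retr"] else None,
        })
    return rows


belkin = """
[ 5][TX-C] 0.00-600.00 sec 41.6 GBytes 596 Mbits/sec sender
[ 7][RX-C] 0.00-600.00 sec 21.6 GBytes 310 Mbits/sec 24422 sender
"""
for row in parse_summary(belkin):
    print(row)
```

The pattern does not care whether the rows are on separate lines or run together on one line, which helps when the output has been mangled by a copy and paste.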

-

Well, those are very different measurements!

For an accurate output power measurement you would use a RF power meter connected to the transmitter output. What is the "correct" output power can be a tricky question though. If, for example, you are using an antenna with gain your actual output power should be lower in order to avoid violating ERP (effective radiated power) limits. Or you can (extreme care here!!) use a spectrum analyser together with a properly configured chain of known attenuators.
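The relationship between transmitter power, antenna gain and ERP is just addition in decibels. A minimal sketch follows; the 50 mW limit, the antenna gain and the cable loss are made-up example values, not the legal figures for any band or country.

```python
import math

DIPOLE_GAIN_DBI = 2.15  # gain of a half-wave dipole over an isotropic radiator


def mw_to_dbm(milliwatts):
    return 10 * math.log10(milliwatts)


def dbm_to_mw(dbm):
    return 10 ** (dbm / 10)


def erp_dbm(tx_dbm, antenna_gain_dbi, cable_loss_db=0.0):
    """Effective radiated power (referenced to a dipole) in dBm."""
    return tx_dbm - cable_loss_db + antenna_gain_dbi - DIPOLE_GAIN_DBI


def max_tx_dbm(erp_limit_dbm, antenna_gain_dbi, cable_loss_db=0.0):
    """Highest transmitter output that still respects the given ERP limit."""
    return erp_limit_dbm + cable_loss_db - antenna_gain_dbi + DIPOLE_GAIN_DBI


# Hypothetical case: 50 mW ERP limit, 5.15 dBi antenna (3 dB over a dipole), 1 dB of cable.
limit = mw_to_dbm(50)
tx = max_tx_dbm(limit, antenna_gain_dbi=5.15, cable_loss_db=1.0)
print(f"Maximum transmitter output: {tx:.2f} dBm ({dbm_to_mw(tx):.1f} mW)")
```

So with a gain antenna the "correct" reading on the power meter is lower than the limit itself, which is the tricky part mentioned above.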

A spectrum analyser also allows you to check a modulated signal in order to detect spurious signals, etc. Some even include specialised modulation analysis software.

For other measurements such as insertion loss, frequency response (important for filters!) etc. the most usual instruments are either a spectrum analyser with a tracking generator (an RF generator whose frequency is synchronised with the spectrum analyser sweep) or an SNA/VNA (scalar or vector network analyser). The latter instruments (especially the VNA) also allow you to check impedance matching.

Which gadget did you get?

Stuff such as VNAs or spectrum analysers used to be extremely expensive but nowadays there are cheap alternatives good enough for many purposes. For example RF Explorer is very well known in this forum.

There are also some SNA or VNA units sold mostly for radio amateurs as "antenna analysers" at prices below 500 euro. And if you want to get more serious, Siglent (a Chinese manufacturer who works together with LeCroy) have released surprisingly capable instruments at an affordable price. Even Rohde & Schwarz have followed suit.

-

4 hours ago, r.paterson said:

Mics are assembled in what is currently the factory in Stroud, UK, soon to be closed. Circuit boards etc. are all manufactured by Rycote. The capsule is 17mm and must be from another manufacturer, but it's a secret who manufactures it. The capsule response is as per the specs: smooth, no bumps, so pro spec. I wondered if it is German, maybe MBHO?

I stand corrected then.

-

On 12/16/2022 at 12:01 AM, Peter Mega said:

https://www.netgear.com/au/business/wired/switches/fully-managed/m4250/avline/

These switches are designed for AV use. Select use for Dante and it will configure the port accordingly. It’s not 12v DC but these are designed for what we want as far configuration goes.

Then that one looks like a great solution.

I always insist on managed switches because I have seen so many paranormal phenomena in my life. From negotiation mismatches to cabling problems that cause a bit of packet loss, including switches with "intelligent" switch port configuration that get confused when connected to equipment the designers did not expect, stupid multicast/broadcast "storm control" you can't disable... You name it.

The problem with unmanaged switches is, if you hit a corner case that the designers didn't consider, you are busted.

-

On 12/17/2022 at 7:26 PM, Spin360 said:

(...)they started making microphones, Timo left Rycote, their support took a few months to pick up where he left...

Are they really making microphones? Or are they selling some OEM stuff from China?

It is clear I was wrong.

I remember when Marantz Pro suddenly "sprouted" a really diverse line up of products including microphones, mountings, a zeppelin that looks too similar to the Rode Blimp basket (I would guess Rode outsources the basket and adds their own lyre based suspension), while the latest iteration of the PMD661 (mkIII) came out with inferior microphone input noise specifications (according to the Avisoft tests).

-

17 hours ago, Paul F said:

I expected the same news to come from Sound Devices by now since it was sold off to a holding company much like Vitec. Shut the place down. Move it somewhere else to consolidate. Find cost savings in every corner. But, it has been just over a year. Maybe they will get lucky.

Is it just me, or is the group that acquired Sound Devices very different from Vitec or Harman? Harman essentially axed AKG after being bought by Samsung, for instance.

-

2 hours ago, LuisT said:

borjam, do you have any reference like the TRENDnet, which takes 12V DC in and is cold resistant for outside shoots, but as a managed switch?

https://www.amazon.fr/TRENDnet-6-Port-Hardened-Industrial-Giga/dp/B01MQXZWLY

I have looked at some options and (warning, I haven't tried it!) the Tripp Lite/Eaton line of switches seems to tick all the boxes. Moreover it is very tolerant of input voltage.

Model NGI-M08C4-L2

There is some contradictory information though: the datasheet mentions Energy Efficient Ethernet, while the manual makes absolutely no mention of it. It is not even listed in the specifications and there is no command to enable/disable it. On industrial equipment it makes more sense not to support it because some exotic industrial kit can fail in surprising ways when presented with up to date equipment.

I would ask them anyway. The support for fiber ports is great in case you need to extend it. With single mode fiber you can extend Gigabit Ethernet to 2 km and the cable is very light. Of course it's impervious to electromagnetic interference.

Anyway I am asking HP/Aruba. We have a good relationship with them and you can likely find some second hand older models at very good prices. I remember I tried Dante with the 2530 series and it ticked all the boxes.

-

The safe bet is a properly configured managed switch.

Unmanaged ones can be hit or miss. If it works, fine. But unmanaged switches make it impossible to diagnose "subtle" problems such as packet drops caused by failing ports, damaged or improperly wired cables...

I know managed switches are much more expensive, but compared to the cost of pro recorders, microphones, etc, they are still peanuts.

Just my 2 cents!

-

On 11/12/2022 at 5:52 AM, Paul F said:

I don't see anything in the documentation that says the BP-TRX has diversity receiver capability, which would mean there would be no reason to use the microphone as an antenna. Besides, you are using the BP-TRX as a transmitter, in which case, it would use only one antenna.

In a system like the BP-TRX, the receiver is actually a transceiver because it's sending feedback information to the transmitter.

On 11/12/2022 at 5:52 AM, Paul F said:

RF stuff is so freaky, who knows what the microphone might have to do with it*. Maybe the RF off the antenna is coupling into the mic input better with the Tram. Try coiling it up into a tight coil and see if that affects it. That might indicate if the mic cable is a problem or just a coincidence that it performs better without the Tram.

It must be a problem in the analog domain. These units transmit digital audio, which means that RF interference would cause drops. Maybe RF interference to the microphone, as you say, is causing this. I would try to coil the microphone cable around a small ferrite bead, a couple of turns or so. If that works it would mean you found where the problem is.

It doesn't need to be 2.4 GHz. The interfering signal affecting the microphone could be in a different band.

Speaking of the 2.4 GHz band, and this is a long shot, look for alarm movement sensors. Some older ones transmit a strong signal on the 2.4 band, although more modern units work at 10 GHz.

-

On 11/11/2022 at 7:08 PM, PCMsoundie said:

Audinate says:

"Digital audio requires synchronization for accurate playback of audio samples. Dante uses Precision Time Protocol (PTP version 1, IEEE 1588-2002) by default for time synchronization. This generates a few small packets, a few times per second. One clock leader is elected on a per subnet basis that sends multicast sync and follow up messages to all followers. Follower devices send delay requests back to the leader to determine network delay."

Here is the update on that protocol:

Gotham Sound did a Dante setup for multitrack audio for location sound in a Youtube Video and found that the PTP in Dante was still unreliable and they needed to use Wordclock for reliability over say 18 hours.

For Digital audio the gold standard for staying in sync between multiple digital audio devices is wordclock.

The main problem with Dante clock sync is, I am pretty sure, the appalling state of the computer industry and, hence, most of the networking products.

You can't imagine how much disregard there is for standards, let alone bugs implementing complicated specifications for seldom used protocols.

That protocol is based on multicast, whose performance can vary widely from one piece of network equipment to another.

On 11/12/2022 at 7:02 AM, The Documentary Sound Guy said:

Interesting article, thanks!

Unfortunately, it's not written with enough audio knowledge to fully address the problems we were exploring in this thread.

I'm intrigued by the idea of using synchronized internet time as a timecode source for all media, but I'm very much unconvinced it can serve the purpose that syncing word clocks serves. It touts that "millisecond" precision would solve the "not narrow enough resolution" issue (i.e. timecode only represents frame-labels, not sample labels), but uniquely labelling audio at the sample level can't be done with millisecond precision; microsecond precision is required. I'm not sure how precise internet time sync is, but given that IP latency is measured in milliseconds (and latency is variable packet to packet), I very much doubt it provides adequate precision for sample-accuracy. It's also highly unlikely they would stay in sync with millisecond precision for very long without re-jamming, which just brings us back to all the problems we have been discussing here, namely, how long will two clocks maintain precision before they drift.

Internet time synchronization (the NTP protocol) uses statistics to achieve sub-millisecond accuracy. Proper implementations do it without "jumps", by carefully adjusting the clock frequency so that time remains a monotonic function (I mean, each and every second exists; it won't adjust a clock sledgehammer style by changing the second number).

Still, hardware accuracy matters. If you don't have a good TCXO, NTP will not work miracles, although sometimes it really looks like magic.

These techniques can be also used with a GPS clock reference which benefits from the accuracy of the GPS satellite clock.
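The difference between slewing a clock and stepping it can be sketched in a few lines of Python. This is only a toy model and not how any real NTP daemon is written: the function names are invented, and the 500 ppm limit is just an illustrative cap on how hard the clock frequency gets pushed.

```python
def slew_clock(offset_s, max_rate_ppm=500.0, tick_s=1.0):
    """Remove an offset by running the clock slightly slow or fast.

    Returns the local clock reading at every tick of true time.
    """
    max_adj = max_rate_ppm * 1e-6 * tick_s  # largest correction per tick
    true_t, local_t = 0.0, offset_s
    readings = [local_t]
    while abs(local_t - true_t) > 1e-9:
        error = local_t - true_t
        adj = max(-max_adj, min(max_adj, error))  # clamp the correction
        true_t += tick_s
        local_t += tick_s - adj
        readings.append(local_t)
    return readings


def step_clock(offset_s, ticks=3, tick_s=1.0):
    """Sledgehammer style: run with the error, then jump to true time."""
    readings = [offset_s + i * tick_s for i in range(ticks)]
    readings.append(ticks * tick_s)  # the jump
    return readings


slewed = slew_clock(0.010)  # clock is 10 ms ahead
print("ticks needed to slew away 10 ms:", len(slewed) - 1)
print("stepped clock, 2 s ahead:", step_clock(2.0))
```

The slewed readings always increase, so no timestamp is repeated or skipped. The stepped clock reads a later time and then an earlier one, which is exactly what you don't want while recording.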

On 11/12/2022 at 7:02 AM, The Documentary Sound Guy said:

Maintaining timecode with millisecond or microsecond precision based on a common internet source sounds like a great idea from a post workflow point of view, but it sounds like an engineering nightmare to implement with the promised accuracy if it is possible at all.

On 11/12/2022 at 7:02 AM, The Documentary Sound Guy said:

I did appreciate that the article succinctly spelled out the problem I was trying to solve when I started this thread (and still don't have a complete answer for) under the heading "The Problem of Size". Namely, a timecode frame is ~2,000 samples long, which leaves it unclear how sample-level precision can be achieved with timecode sync, since a timecode stamp only identifies a sample to within +/- ~2,000 samples. Word clock can make sure that clocks don't drift, but because the wordclock signal doesn't carry *label* information, it can make sure that samples are the same length, but there is no mechanism that can identify two samples on different recorders as representing the same point in time.

Maybe labelling each and every sample is overkill and it's better to focus on avoiding clock drift in the first place anyway. If you have an accurate label for the first sample in a video frame and clock drift is really small, well, we can say you nailed it.
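To put some numbers on it, here is a small Python sketch. The 48 kHz sample rate and 24 fps frame rate are my assumptions (they match the "~2,000 samples per frame" figure in the quote), and the 1 ppm clock error is just an example value, not a measurement of any recorder.

```python
def samples_per_frame(sample_rate=48_000, fps=24):
    """How many audio samples fit in one timecode frame."""
    return sample_rate / fps


def drift_in_samples(ppm, hours, sample_rate=48_000):
    """Samples of drift between two clocks whose rates differ by `ppm`."""
    return ppm * 1e-6 * hours * 3600 * sample_rate


def hours_until_drift(ppm, max_samples, sample_rate=48_000):
    """Running time before the drift reaches `max_samples`."""
    return max_samples / (ppm * 1e-6 * 3600 * sample_rate)


print("samples per frame :", samples_per_frame())
print("sample period (us):", round(1e6 / 48_000, 2))
print("samples per ms    :", 48_000 / 1000)
print("drift after 1 h at 1 ppm (samples):", round(drift_in_samples(1.0, 1.0), 1))
print("hours to drift one frame at 1 ppm :", round(hours_until_drift(1.0, 2000), 1))
```

So under these assumptions a millisecond is dozens of samples wide, while a 1 ppm mismatch takes many hours to add up to a single frame. That is why keeping drift small matters more than labelling every sample.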

For a nice application of wide area clock synchronization for non audio purposes you can have a look at a nice website called Blitzortung.

And this is dealing with radio waves travelling at the speed of light rather than sound.

In a very succinct way, sensors receive and timestamp the radio signals originated by thunderstorms and send the timestamped information to a central server where the positions of the discharges are computed.
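Just to illustrate the principle, here is a toy Python sketch of locating a source from arrival times at several sensors. It is a brute force grid search over time differences of arrival on a flat 2D map. I am not claiming this is the algorithm Blitzortung runs; the sensor layout, grid size and function names are all made up.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def arrival_times(source, sensors, t0=0.0):
    """Timestamp each sensor would record for an event emitted at t0."""
    return [t0 + math.dist(source, s) / C for s in sensors]


def locate(sensors, times, extent_m, step_m):
    """Grid search for the point whose time differences best match.

    Only differences relative to sensor 0 are used, so the unknown
    emission time cancels out.
    """
    best, best_err = None, float("inf")
    n = int(extent_m / step_m)
    for ix in range(-n, n + 1):
        for iy in range(-n, n + 1):
            p = (ix * step_m, iy * step_m)
            d = [math.dist(p, s) / C for s in sensors]
            err = sum(
                ((d[i] - d[0]) - (times[i] - times[0])) ** 2
                for i in range(1, len(sensors))
            )
            if err < best_err:
                best, best_err = p, err
    return best


# Hypothetical layout: four sensors on the corners of a 200 km square.
sensors = [(-100e3, -100e3), (100e3, -100e3), (100e3, 100e3), (-100e3, 100e3)]
times = arrival_times((30e3, -40e3), sensors, t0=12.5)
print("estimated position (m):", locate(sensors, times, extent_m=100e3, step_m=5e3))
```

Note that one microsecond of timing error corresponds to roughly 300 m of path length, so the sensors need clocks that agree very closely for this to work.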

Especially the real time map is spectacular.

https://map.blitzortung.org/#1/17.6/8

And, by the way, this website can be incredibly useful for outdoor shots, etc.

-

18 hours ago, Wandering Ear said:

I believe the micplexer filter is 5 or 10 MHz narrower. I’m not 100% sure the filter was my issue, the behavior of the system acted just like when you overload a receiver and attenuation was the solution. I’m also in a fairly mild RF environment at the moment with not much broadcasting except the local TV stations so it took me a minute to figure out why I was getting poor performance.

Then I doubt my explanation is the right one. Maybe the signal received by the Micplexer was too strong and it was overloading? That would be the simple explanation.

"My" theory would apply in a case in which the first element was amplifying a strong signal that would be rejected by the second filter. The impedance mismatch between the input of the second filter and the output of the first amplifier might cause overload on the amplifier, which would cause distortion.

But, was there a strong signal within that 5 or 10 MHz difference between the two filters?

Not intended as an interrogation, of course!

-

20 hours ago, Wandering Ear said:

In my current setup I’m using Wisycom active antennas which have a bandpass filter and amplification/ attenuation built in. Those feed into a micplexer which also has a filter and amplifier with fixed gain.

I see. Anyway, unless the active antenna is passing a strong interfering signal to the Micplexer filter there should be no problem.

Is that the case? Is the Micplexer filter narrower than the filter at the active antenna?


Rode mics

in Equipment

Posted

I understand his frustration with overly enthusiastic marketing departments, but he doesn't seem to understand very well how digital audio works.

Dynamic range (let's say, macrodynamics) is different from resolution. When he says his voice can be recorded with 8 bits I think he is talking about envelopes, range of amplitudes, not faithful audio recording. So much for 8 bit audio: even good old digital telephone audio only fits into 8 bit codes thanks to non linear A or µ law companding, which covers roughly the range of 13 or 14 linear bits!

Resolution is related to S/N ratio because low resolution means quantization noise. So when you record you aim to make good use of the A/D dynamic range while leaving some headroom to prevent clipping.
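The link between bits and S/N can be checked with a quick Python sketch. It compares the textbook figure for a full-scale sine wave (about 6.02 dB per bit plus 1.76 dB) with a direct measurement on a quantized sine. The 997 Hz tone, the 48 kHz rate and the function names are my own choices for the illustration, and the quantizer is a plain linear one without dither.

```python
import math


def theoretical_snr_db(bits):
    """Full-scale sine vs. uniform quantization noise, in dB."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)


def measured_snr_db(bits, n=48_000, freq=997.0, rate=48_000.0):
    """Quantize a full-scale sine to `bits` and measure signal/noise."""
    full_scale = 2 ** (bits - 1) - 1
    signal_power = noise_power = 0.0
    for i in range(n):
        x = math.sin(2 * math.pi * freq * i / rate)
        q = round(x * full_scale) / full_scale  # linear quantizer
        signal_power += x * x
        noise_power += (q - x) ** 2
    return 10 * math.log10(signal_power / noise_power)


for bits in (8, 16):
    print(bits, "bits: theory", round(theoretical_snr_db(bits), 1),
          "dB, measured", round(measured_snr_db(bits), 1), "dB")
```

That is about 50 dB for 8 linear bits and about 98 dB for 16, and only when the signal uses the full scale. Every 6 dB of unused headroom costs roughly one bit.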

Of course everyone here knows that there is a chain of elements with different dynamic ranges involved in audio recording.

Air: Yes, it can become non linear for loud enough levels! (*)

Microphone

Preamp

ADC.

And it turns out many beginners (at whom many Rode products are aimed!) tend to set their recording levels badly and clip. So, what's wrong with making their lives easier?

As for controlling all of the circumstances or not, well, it depends. For people shooting guerrilla style, or documentaries, or even doing nature recordings, all kinds of unpredictable stuff can happen! Imagine you are recording a distant bird (with lots of preamp gain!) and suddenly an interesting bird comes close and sings.

So yes, it won't capture 32 bit audio. Fine. But it will be much more lenient with recording levels. So what's wrong?

Youtube videos. UGH!